As we mentioned in a previous post, we relied on the techniques discussed on the Nuigroup site to drive our table (see Nuigroup for specifics). They all use computer vision to solve the multitouch problem: the position of the fingers on the surface is tracked by a camera.

A simple webcam can do the trick. However, it needs to be slightly modified to filter out visible light, so that it does not capture the projected image. Then, through frame differencing plus various thresholding and image processing filters, you obtain a pure black (0) and white (1) image describing the position of the elements in contact with the surface. This binary image is then used as the basis for the tracking.
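To make the pipeline above more concrete, here is a minimal sketch in plain Python. It is not Tbeta's actual code. It assumes grayscale frames stored as lists of rows, differences each frame against a stored background frame (one common way to do the differencing step), and uses a hypothetical threshold of 40. The function names `binarize` and `find_blobs` are ours, chosen for illustration.

```python
from collections import deque


def binarize(frame, background, threshold=40):
    """Subtract a stored background frame and threshold the result to 0/1.

    `threshold` is an illustrative value; a real setup would tune it.
    """
    return [
        [1 if abs(p - b) > threshold else 0 for p, b in zip(row, bg_row)]
        for row, bg_row in zip(frame, background)
    ]


def find_blobs(mask):
    """Return the (x, y) centroid of each 4-connected white region."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    centroids = []
    for y in range(height):
        for x in range(width):
            if mask[y][x] == 0 or seen[y][x]:
                continue
            # Flood-fill outward from this unvisited white pixel.
            queue = deque([(x, y)])
            seen[y][x] = True
            pixels = []
            while queue:
                cx, cy = queue.popleft()
                pixels.append((cx, cy))
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and mask[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            centroids.append((
                sum(px for px, _ in pixels) / len(pixels),
                sum(py for _, py in pixels) / len(pixels),
            ))
    return centroids
```

Each centroid is a candidate touch point. A tracker would then match centroids from one frame to the next to give every finger a stable id.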

In our case, we used a modified PS3 Eye webcam, which is relatively cheap and offers excellent frame rates (640×480 at 100fps).

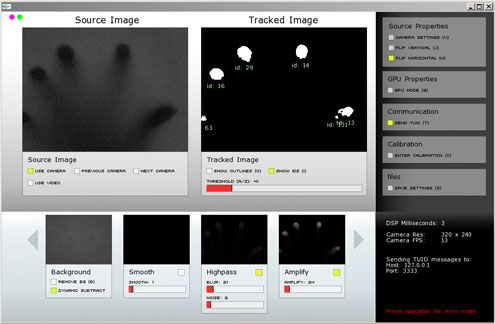

On the software side, we used Tbeta, an open source tracking solution written with the openFrameworks C++ library. Tbeta tracks the elements in contact with the surface and broadcasts their position and id over UDP using the TUIO protocol.
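For readers curious about what arrives on the client side, here is a rough sketch of decoding such a broadcast in Python using only the standard library. It assumes TUIO 1.x's `/tuio/2Dcur` cursor profile carried in OSC messages or bundles, and it handles only the int, float and string argument types. The function names and the `listen` helper are ours, and port 3333 is the customary TUIO default, not something we checked against a particular Tbeta configuration.

```python
import socket
import struct


def _read_string(data, offset):
    """Read a null-terminated OSC string padded to a multiple of 4 bytes."""
    end = data.index(b"\0", offset)
    text = data[offset:end].decode("ascii")
    # Skip the terminator and padding up to the next 4-byte boundary.
    return text, (end + 4) & ~3


def parse_message(data):
    """Decode one OSC message into (address, [arguments])."""
    address, offset = _read_string(data, 0)
    tags, offset = _read_string(data, offset)
    args = []
    for tag in tags[1:]:  # tags[0] is the leading ','
        if tag == "i":
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "f":
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "s":
            value, offset = _read_string(data, offset)
            args.append(value)
        else:
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
    return address, args


def parse_packet(data):
    """Yield (address, args) for a lone message or each message in a bundle."""
    if not data.startswith(b"#bundle\0"):
        yield parse_message(data)
        return
    offset = 16  # 8-byte "#bundle\0" marker plus 8-byte time tag
    while offset < len(data):
        (size,) = struct.unpack_from(">i", data, offset)
        offset += 4
        yield from parse_packet(data[offset:offset + size])
        offset += size


def cursor_positions(data):
    """Return {session_id: (x, y)} from the 2Dcur 'set' messages in a packet."""
    cursors = {}
    for address, args in parse_packet(data):
        if address == "/tuio/2Dcur" and args and args[0] == "set":
            # set arguments: session id, x, y, then velocity and acceleration
            cursors[args[1]] = (args[2], args[3])
    return cursors


def listen(port=3333):
    """Print cursor positions forever; the port number is an assumed default."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("", port))
    while True:
        packet, _ = sock.recvfrom(65535)
        print(cursor_positions(packet))
```

The x and y values are normalized to the range 0 to 1, so a client multiplies them by its own screen size. The `alive` and `fseq` messages in the same bundle, which list the active ids and the frame number, are ignored here to keep the sketch short.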


This shows the Tbeta interface in action. On the left is the source image from the webcam; on the right is the processed B/W image used for tracking.